A unique in-vehicle onboarding system. Powered by ML and AR

An advanced in-vehicle onboarding (IVO) application for mobile devices and infotainment systems.

Services

UX/UI design

Android development

Android Automotive development

ML development

Voice assistant development

Technology

Android Automotive OS

Google Car Library

Swift

SwiftUI

CoreML

Vision

Eliminating confusion on the road

Modern vehicles come equipped with many extra control systems. But many people don’t know how to use them – sometimes, they don’t even know they exist. This can be a serious problem.

You may say that it’s the driver’s fault for not reading the official manual provided with the vehicle in full. But such manuals aren’t exactly known for being a fast and digestible read. There has to be a better, more effective way of introducing the driver to the vehicle’s functionality, and Bamboo Apps has set out to find it.

Sometimes, some indicator may light up and the driver will have no idea what it means. Other times the vehicle’s headlights will brighten or dim seemingly at random. This can be very confusing and distracting. While not always threatening, similar occurrences are common among drivers that just got introduced to a new vehicle.

Anatoly Spirkov

Product Owner

An augmented onboarding solution

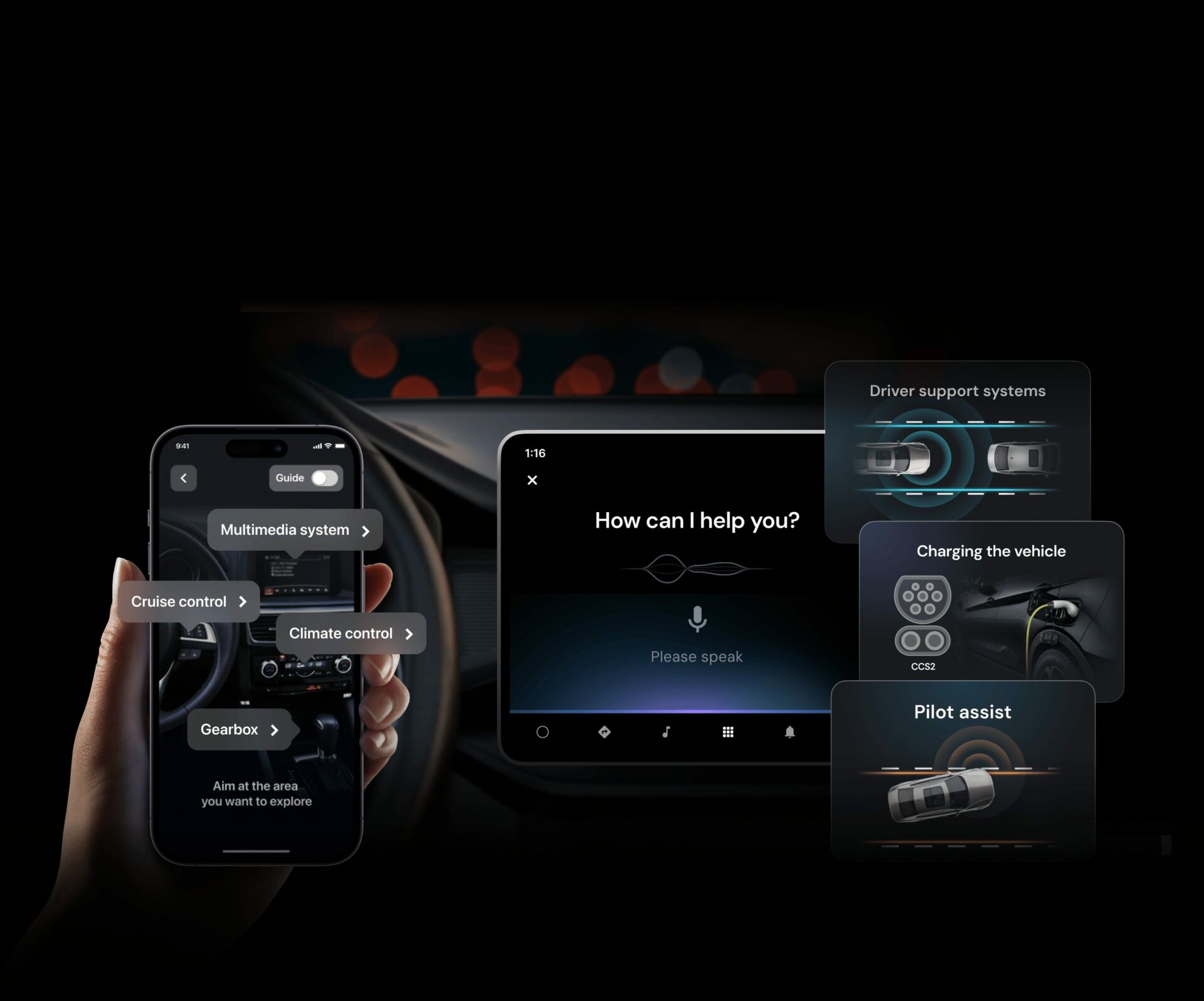

We have designed a smart AR-enhanced in-vehicle onboarding app for mobile devices and infotainment systems. The software is meant to help OEMs, vehicle rental services, and car dealerships acquaint customers with their new vehicles. By streamlining the driver onboarding process, the app improves customer experience, eliminates driver confusion, and increases road safety. The idea is simple.

The app gives the driver an augmented tour of the vehicle’s main and advanced functions by utilising the smartphone camera and a context-sensitive voice assistant. The latter provides relevant explanations based on the driver’s behaviour or the state of the vehicle during a trip. Of course, the customer can also read about the vehicle’s features at their own pace using the simplified text-based manual.

Enhancing the driver onboarding experience

An augmented tour

A typical experience with the app goes something like this. A driver gets into a new vehicle and opens the mobile application. They can point the device camera at any part of the car interior, for example the handbrake button. Once the driver aims the camera at it, they get a pop-up with a short rundown of how it works.

The driver can also select the guide option, which will showcase all of the main features in a predetermined order. If the driver is using the in-vehicle infotainment system, the guide comes with a voiceover. But that’s not all the voice assistant is capable of.

Contextual tips

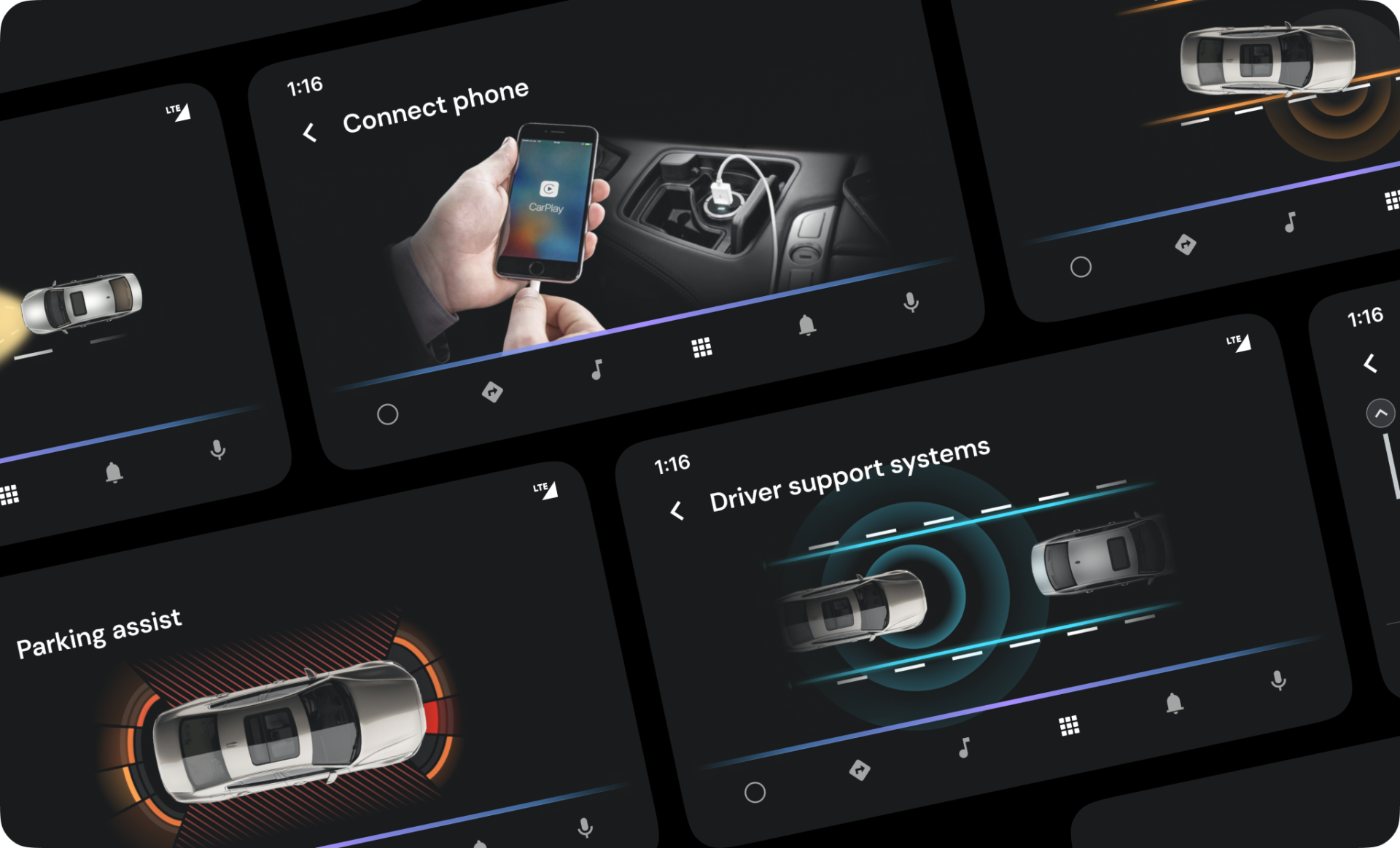

The voice assistant delivers contextual tips to the driver over the course of the trip, based on the state of the vehicle, the environment around it, and the driver’s behaviour.

Essentially, a tip is triggered whenever the driver encounters a situation where a certain function could be useful, or when a feature activates automatically for the first time.

This includes:

- Explaining how to connect the phone via CarPlay or Android Auto;

- Explaining how to properly charge the car’s battery;

- Suggesting cruise control and explaining how it works when the system deems it appropriate;

- Suggesting the parking assistant when the system detects that the driver is about to park;

- Explaining the blind spot monitoring (BSM) feature when the system first notices an object in the driver’s blind spot.
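The "first time only" behaviour of these tips can be illustrated with a small rule table. The following is a minimal sketch, not the app's actual logic: the state keys (`speed_kmh`, `steady_speed_s`, `reverse_gear`, `blind_spot_object`) and all thresholds are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TipEngine:
    """Remembers which tips were already given so each is offered only once."""
    shown: set = field(default_factory=set)

    def tips_for(self, state: dict) -> list:
        speed = state.get("speed_kmh", 0)
        # Each rule pairs a tip id with a condition on the (hypothetical) vehicle state.
        rules = [
            # Assumed trigger: steady motorway-like speed for at least a minute.
            ("cruise_control", speed >= 90 and state.get("steady_speed_s", 0) >= 60),
            # Assumed trigger: crawling in reverse gear.
            ("parking_assistant", speed < 10 and state.get("reverse_gear", False)),
            # Assumed trigger: the blind spot sensor reports an object.
            ("bsm", state.get("blind_spot_object", False)),
        ]
        new_tips = [tip for tip, triggered in rules
                    if triggered and tip not in self.shown]
        self.shown.update(new_tips)
        return new_tips
```

Calling `tips_for` twice with the same blind spot reading returns the BSM tip the first time and nothing the second time, which mirrors the idea of explaining a feature only when the driver first meets it.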

The driver can also receive alerts. For instance:

- When the vehicle loses some pressure in one of its tires, the assistant suggests the driver stops the car as soon as they can and checks the wheels. It also points to the nearest maintenance service.

- Similarly, the assistant suggests stopping and checking the car if the system detects an engine problem. In that case, the driver is once again pointed to the nearest repair shop.

- The assistant alerts the driver when the vehicle is low on fuel. It states the type of fuel the vehicle uses, where the tank is located, and how to open it.

- If the trip goes on long enough and the system detects a change in the driver’s behaviour, the voice assistant suggests that they take a rest stop.
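As a rough illustration of how alerts like these could be assembled from vehicle data, here is a minimal sketch. The `VehicleProfile` fields, the 10% fuel threshold, and the 15% pressure-drop threshold are assumptions made for the example; the case study does not state the values the real system uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VehicleProfile:
    """Static, per-model facts the assistant can quote (hypothetical fields)."""
    fuel_type: str
    tank_side: str
    tank_release: str


def build_alerts(profile, fuel_fraction, tire_pressures_kpa, nominal_kpa=230.0):
    """Return the alert messages that apply to the current readings."""
    alerts = []
    # Assumed threshold: warn when less than 10% of the fuel is left.
    if fuel_fraction < 0.10:
        alerts.append(
            f"Fuel is low. This vehicle uses {profile.fuel_type}; "
            f"the tank is on the {profile.tank_side} side. "
            f"To open it, {profile.tank_release}."
        )
    # Assumed threshold: a tire more than 15% below nominal pressure.
    low = sorted(name for name, kpa in tire_pressures_kpa.items()
                 if kpa < 0.85 * nominal_kpa)
    if low:
        alerts.append(
            "Low pressure detected: " + ", ".join(low)
            + ". Stop when you can and check the wheels."
        )
    return alerts
```

Keeping the model-specific facts in a separate profile means the same alert rules could be reused across vehicles, with only the quoted details changing.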

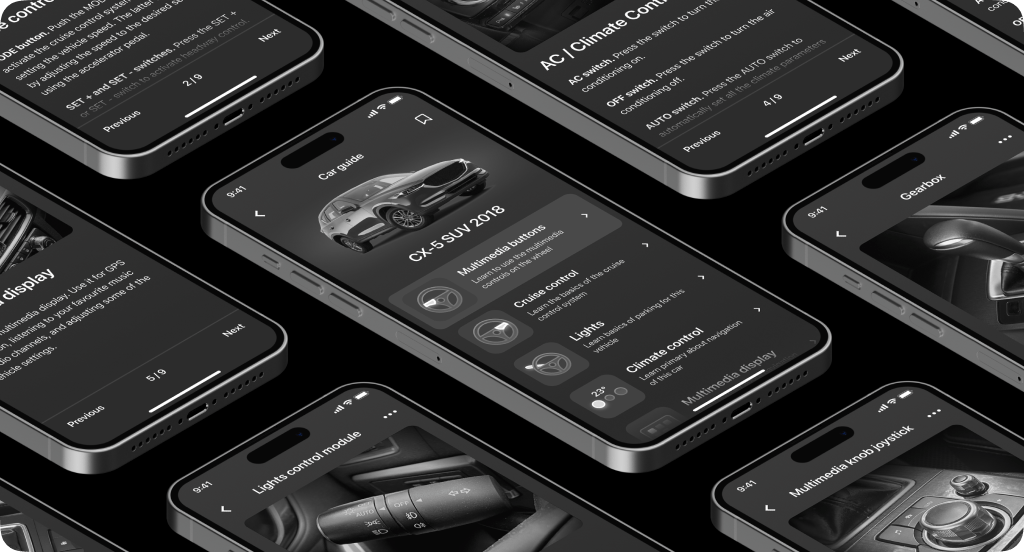

A text-based guide

The driver has the option to read about all of the vehicle’s features in the form of a simplified manual. The information is segmented into small chunks, making it easy to take in and remember. As a result, the driver can learn about their new car before picking it up. It’s very useful for car rentals in particular.

Let’s say the driver is travelling to a different country by plane. They’ve rented a car in advance and they are planning to pick it up at the airport. So instead of wasting their time poking around the cabin after they get into the vehicle, the driver can simply open the app and learn all about its functionality while still in transit.

Going beyond the limits

The development took two months and was carried out by a team consisting of a UX/UI designer, a hardware and ML specialist, an iOS developer, an Android Automotive OS developer, a Tech Lead / CTO, and a PM. Like any complex software development project, building the IVO system presented several challenges.

Version restrictions

One issue was that not all devices run the full version of Android Automotive OS (AAOS). Some AAOS systems do not have access to the Google Play Store and offer a limited pool of features. So while the IVO system could be emulated using a full version of AAOS, the demo device only ran a restricted one.

This meant we had to compromise on some design decisions. For example, the restricted version didn’t have any voice recognition features available. After a fruitless search for voice processing tools, drivers, and libraries we could possibly implement, we decided to keep the voice guide in the form of automatic audio messages.

Machine learning

Fully implementing the machine learning technology was a challenge in its own right. A lot of effort went into preparing the dataset, as well as developing and training the ML model.

Android Automotive OS support

The lack of a large support community and up-to-date documentation for Android Automotive OS made the initial development much harder. The official manuals for the OS were quite old, so not all of the information in them was still relevant or well organised.

Google guidelines

Google is very meticulous about what can and cannot go into an automotive app. This is understandable, of course, as no interface elements or features should distract the driver from the road. That’s why Google recommends using their special Car Library to develop UIs.

Emulation and CAN-bus

We had to use a Raspberry Pi 4 with a 10-inch display running AAOS 11. Installing the system on both devices took some work, but in the end, it was a success. Finally, to emulate a CAN-bus, the engineers combined a Raspberry Pi 3 tablet and a CAN board into a single system with some additional coding.
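The case study does not describe the "additional coding" in detail. As an illustration of the kind of work CAN-bus emulation involves, the sketch below packs a vehicle speed reading into an 8-byte CAN payload and decodes it again. The frame ID `0x123`, the signal position, and the 0.01 km/h scaling are hypothetical; real values come from the vehicle's signal definitions.

```python
import struct

SPEED_FRAME_ID = 0x123  # hypothetical arbitration ID for the speed frame


def encode_speed_frame(speed_kmh: float) -> tuple:
    """Build (frame id, 8-byte payload) for a speed reading."""
    raw = round(speed_kmh * 100)       # assumed scaling: 0.01 km/h per bit
    data = struct.pack(">H6x", raw)    # big-endian uint16 followed by 6 zero bytes
    return SPEED_FRAME_ID, data


def decode_speed_frame(frame_id: int, data: bytes) -> float:
    """Recover the speed in km/h from a frame built by encode_speed_frame."""
    if frame_id != SPEED_FRAME_ID or len(data) != 8:
        raise ValueError("not a speed frame")
    (raw,) = struct.unpack(">H6x", data)
    return raw / 100
```

An emulator on the CAN side would send frames like these at a fixed interval, while the infotainment side decodes them to drive the app's view of the vehicle state.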

A grand reveal

The Bamboo Apps team has successfully developed a demo of the IVO system. The software was presented for the first time at GITEX 2022 in Dubai, UAE, on 10-14 October.